Article of the Week: Cognitive skills assessment during robot-assisted surgery

Every week the Editor-in-Chief selects the Article of the Week from the current issue of BJUI. The abstract is reproduced below and you can click on the button to read the full article, which is freely available to all readers for at least 30 days from the time of this post.

In addition to the article itself, there is an accompanying editorial written by a prominent member of the urological community. This blog is intended to provoke comment and discussion, and we invite you to use the comment tools at the bottom of each post to join the conversation.

Finally, the third post under the Article of the Week heading on the homepage will consist of additional material or media. This week we feature a video from Dr Khurshid A. Guru discussing his paper.

If you only have time to read one article this week, it should be this one.

Cognitive skills assessment during robot-assisted surgery: separating the wheat from the chaff

Khurshid A. Guru, Ehsan T. Esfahani†, Syed J. Raza, Rohit Bhat†, Katy Wang‡, Yana Hammond, Gregory Wilding‡, James O. Peabody§ and Ashirwad J. Chowriappa

Department of Urology, Roswell Park Cancer Institute, Buffalo, NY; †Brain Computer Interface Laboratory, Department of Mechanical & Aerospace Engineering, University at Buffalo, Buffalo, NY; ‡Department of Biostatistics, Roswell Park Cancer Institute, Buffalo, NY; and §Henry Ford Health System, Detroit, MI, USA

OBJECTIVE

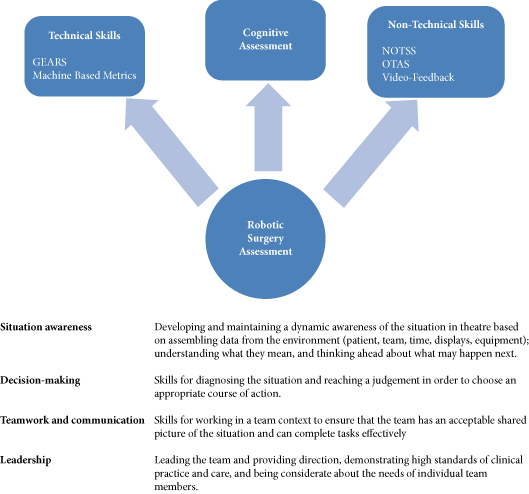

To investigate the utility of cognitive assessment during robot-assisted surgery (RAS) for defining skills in terms of cognitive engagement, mental workload and mental state, and for objectively differentiating between novice and expert surgeons.

SUBJECTS AND METHODS

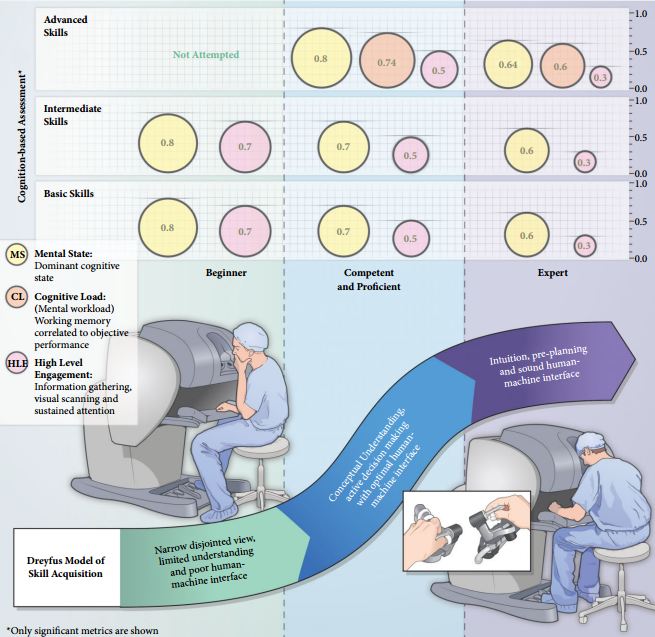

In all, 10 surgeons with varying operative experience were assigned to beginner (BG), combined competent and proficient (CPG), and expert (EG) groups based on the Dreyfus model. The participants performed tasks assessing basic, intermediate and advanced skills on the da Vinci Surgical System™. Participant performance was assessed using both tool-based and cognitive metrics.

RESULTS

For basic skills, tool-based metrics showed significant differences between the BG and CPG and between the BG and EG. For intermediate skills, the only significant differences were in instrument-to-instrument collisions, between the BG and CPG (2.0 vs 0.2, P = 0.028) and between the BG and EG (2.0 vs 0.1, P = 0.018). Tool-based metrics showed no significant differences between the CPG and EG for either basic or intermediate skills. However, cognitive metrics showed significant differences between all groups for both basic and intermediate skills. For advanced skills, tool-based metrics showed no significant differences between the CPG and EG except in time (1116 vs 599.6 s), whereas cognitive metrics revealed significant differences between the two groups.

CONCLUSION

Cognitive assessment of surgeons may aid in defining levels of expertise in performing complex surgical tasks once competence has been achieved. Cognitive assessment may be used as an adjunct to traditional methods of skill assessment during RAS.