Artificial intelligence (AI) will bring in a new wave of changes in the medical field, likely altering how we practice medicine. In a timely contribution, Chen et al. [1] outline the current landscape of AI and provide us with a glimpse of the future, in which sophisticated computers and algorithms play a front-and-centre role in the daily hospital routine.

Widespread adoption of electronic medical records (EMRs), an ever-increasing amount of radiographic imaging, and the ubiquity of genome sequencing, among other factors, have created an impossibly large body of medical data. This makes it difficult for clinicians to remain abreast of new discoveries, but it also presents new opportunities for scientific discovery. AI is the inevitable and much-needed tool with which to harness the ‘big data’ of medicine.

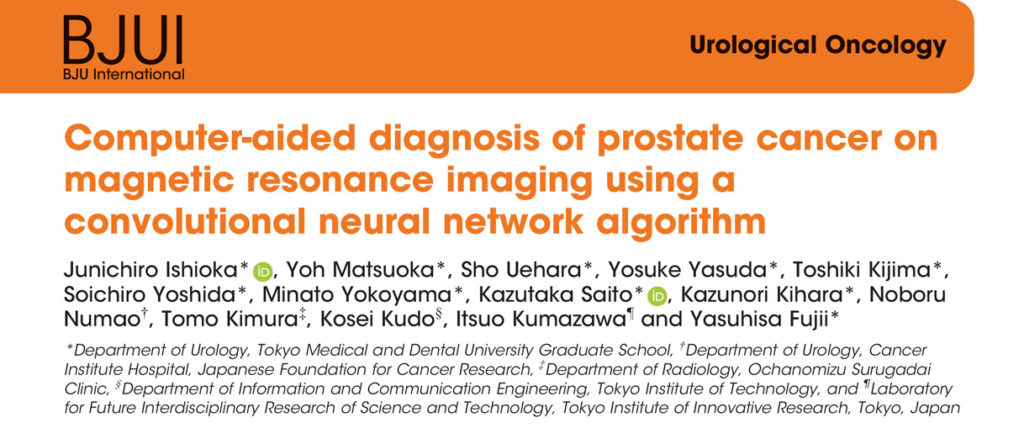

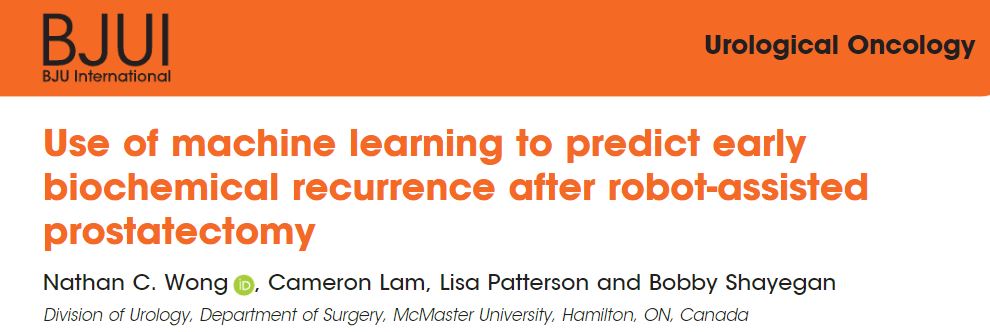

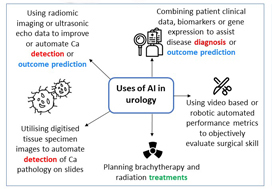

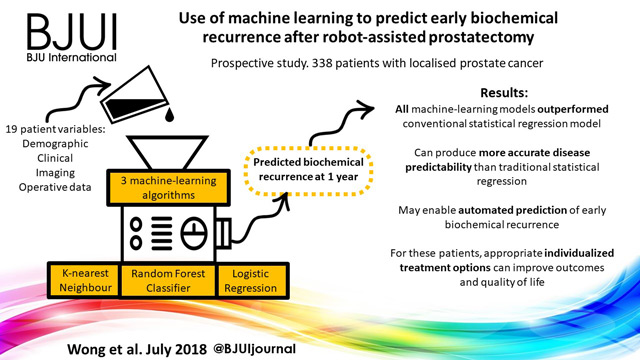

Currently, the most immediate and important application of AI appears to be in the field of diagnostics and radiology. In prostate cancer, for example, machine learning algorithms (MLAs) are not only able to automate radiographic detection of prostate cancer but have also been shown to improve diagnostic accuracy compared to standard clinical scoring schemes. MLAs can use clinicopathological data to predict clinically significant prostate cancer and disease recurrence with a high degree of accuracy. The same has been shown for other urological malignancies, including urothelial cancer and RCC. Implementation of MLAs will lead to improved accuracy and reproducibility, reducing human bias and variability. We also predict that as natural language processing becomes more sophisticated, the troves of unstructured data that exist in EMRs will be harnessed to deliver improved and more personalized patient care. Patient data and clinical outcomes can be analysed in a short time, drawing from a deep body of knowledge, and leading to rapid insights that can guide medical decision-making.

Current AI technology, however, remains experimental and we are still far from the widespread implementation of AI within clinical medicine. A valid criticism of today’s AI is that it functions as a ‘black box’; the rules that govern the clinical decision-making of an algorithm are often poorly understood or unknowable. We cannot become operators of machines whose workings we do not understand; to do so would be to practice medicine blindly.

Another barrier to incorporating AI into common practice is the level of noise in healthcare data. MLAs will use whatever data are fed to the algorithm, thus running the risk of producing predictive models that include nonsensical variables gleaned from the noise. This concept is similar to multiple hypothesis-testing, where if you feed enough random information into a model, a pattern might emerge by chance alone. Furthermore, none of the studies described by Chen et al. have been externally validated on large, representative datasets of diverse patients. MLAs trained on a narrow patient population run the risk of creating predictions that are not generalizable. This problem has already been widely recognized within genome analysis, where one study found that 81% of participants in genome-wide association studies were of European ancestry [2]. It is easy to imagine situations where risk score calculators or biomarkers are validated using non-representative datasets, leading to less accurate and even inappropriate treatment decisions for underrepresented patient populations. At best, MLAs that are not validated using stringent principles can lead to erroneous disease models. At worst, they can bias the delivery of healthcare to patients, leading to worse patient outcomes and exacerbation of healthcare disparities.
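The multiple hypothesis-testing concern can be illustrated with a small simulation. The sketch below is purely illustrative and is not drawn from any study discussed by Chen et al.: the function names, cohort sizes and number of variables are all assumptions chosen for the example. It generates an outcome and a large number of candidate variables that are pure random noise, picks the variable most strongly correlated with the outcome in a small 'discovery' cohort, and then re-measures that same noise variable in a larger, independent 'validation' cohort.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_noise_feature(n_patients=30, n_features=500,
                            n_validation=300, seed=0):
    """Hypothetical demo: screen many noise variables against a noise outcome.

    Returns (index of the 'best' variable, its correlation in the discovery
    cohort, its correlation in an independent validation cohort).
    """
    rng = random.Random(seed)

    # Discovery cohort: the outcome is random and unrelated to any variable.
    outcome = [rng.gauss(0, 1) for _ in range(n_patients)]

    best_index, best_r = 0, 0.0
    for j in range(n_features):
        feature = [rng.gauss(0, 1) for _ in range(n_patients)]
        r = pearson_r(feature, outcome)
        if abs(r) > abs(best_r):
            best_index, best_r = j, r

    # Validation cohort: the selected variable is still pure noise, so we
    # simply draw fresh, independent values for it and for the outcome.
    new_outcome = [rng.gauss(0, 1) for _ in range(n_validation)]
    new_feature = [rng.gauss(0, 1) for _ in range(n_validation)]
    validation_r = pearson_r(new_feature, new_outcome)

    return best_index, best_r, validation_r


if __name__ == "__main__":
    index, discovery_r, validation_r = strongest_noise_feature()
    print(f"best noise variable: #{index}")
    print(f"discovery-cohort correlation:  {discovery_r:+.2f}")
    print(f"validation-cohort correlation: {validation_r:+.2f}")
```

Under these assumptions, the 'best' variable typically shows a seemingly meaningful correlation in the small discovery cohort that largely disappears in the independent cohort, which is why external validation on large, representative datasets matters.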

Chen et al. write of the possibility of AI in urology today. What about the future? Imagine a world in which computers with a robotic interface see patients in clinics, design and carry out complex medical treatment plans, and perform surgery without the aid of a human hand. This future may not be far off [3]. Or, even stranger, consider a world in which generalizable AI exists. Estimates of when this technology will arrive vary; however, the most optimistic projections put the timeline on the order of 20–30 years. Not far behind could be the ‘singularity’, a moment when technological advancement occurs at such an exponential rate that improbable scientific discoveries happen almost instantaneously, setting off a feed-forward cycle leading to an inconceivable superintelligence.

The future is, of course, hard to predict. Nevertheless, AI and the ensuing technology will certainly transform the practice of urology, albeit not without significant challenges and growing pains along the way. The urologist of the future may look very different indeed.

by Stephen W. Reese, Emily Ji, Aliya Sahraoui and Quoc-Dien Trinh

References

1. Chen J, Remulla D, Nguyen JH et al. Current status of artificial intelligence applications in urology and its potential to influence clinical practice. BJU Int 2019; 124: 567–77

2. Popejoy AB, Fullerton SM. Genomics is failing on diversity. Nature 2016; 538: 161–4

3. Grace K, Salvatier J, Dafoe A, Zhang B, Evans O. When will AI exceed human performance? Evidence from AI experts, 2017